ButSpeak.com

News which Matters.

OpenPipe’s Mixture of Agents (MoA) model achieves state-of-the-art results and cost efficiency in AI, outperforming GPT-4 and revolutionizing synthetic data generation.

In artificial intelligence, better performance at lower cost is a constant pursuit. OpenPipe has made notable progress with its Mixture of Agents (MoA) model, which is designed to generate synthetic training data. The model achieves state-of-the-art (SOTA) benchmark results and offers a cost-effective alternative to existing models, particularly GPT-4.

OpenPipe’s MoA model scored 84.8 on LMSYS’s Arena Hard Auto and 68.4 on AlpacaEval 2.0. Both benchmarks consist of challenging user queries that test the robustness and adaptability of AI models, so strong scores suggest the model’s outputs are of high enough quality to serve as synthetic training data.

The MoA model has also been benchmarked against various GPT-4 variants on real-world tasks encountered by OpenPipe’s customers. With Claude 3 Opus acting as the judge, the MoA model’s output was preferred over GPT-4’s in 59.5% of the evaluated comparisons. This result points to the model’s practical value beyond synthetic benchmarks.

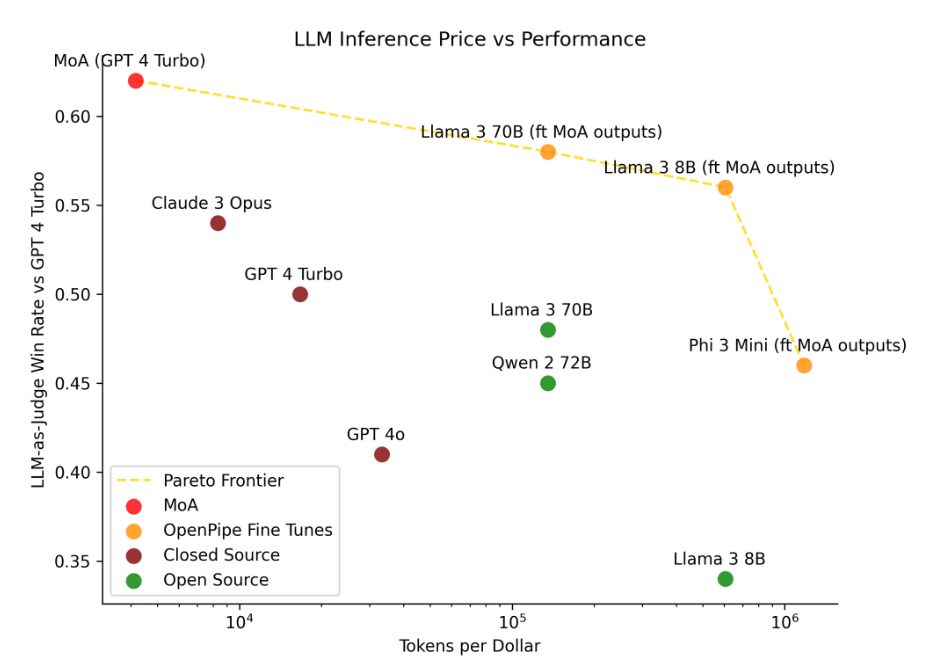
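
A preference rate like the one reported above is simply the share of pairwise comparisons in which the judge picked one model’s output. The following is a minimal sketch of that arithmetic, not OpenPipe’s evaluation code; the function name, the label strings, and the verdict list are all made up for illustration.

```python
from typing import List


def preference_rate(verdicts: List[str], model: str) -> float:
    """Return the fraction of judge verdicts that favour `model`.

    `verdicts` holds one label per pairwise comparison, naming the
    model whose output the judge preferred.
    """
    if not verdicts:
        raise ValueError("at least one verdict is required")
    wins = sum(1 for v in verdicts if v == model)
    return wins / len(verdicts)


# Illustrative verdicts only; these are not OpenPipe's data.
example_verdicts = ["moa", "gpt-4", "moa", "moa", "gpt-4"]
example_rate = preference_rate(example_verdicts, "moa")
```

In practice, LLM-as-judge setups often also swap the order in which the two outputs are shown to reduce position bias; that step is left out here for brevity.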

One of the standout features of the MoA model is its efficiency. OpenPipe has successfully fine-tuned smaller Llama 3 models using synthetic data generated by the MoA model. These fine-tuned models, Llama 3 70B and Llama 3 8B, have outperformed GPT-4 on multiple tasks. Remarkably, the fine-tuned Llama 3 8B model outperforms GPT-4 on three out of four tasks at a fraction of the cost: it is 25 times cheaper and three times faster to run than GPT-4.

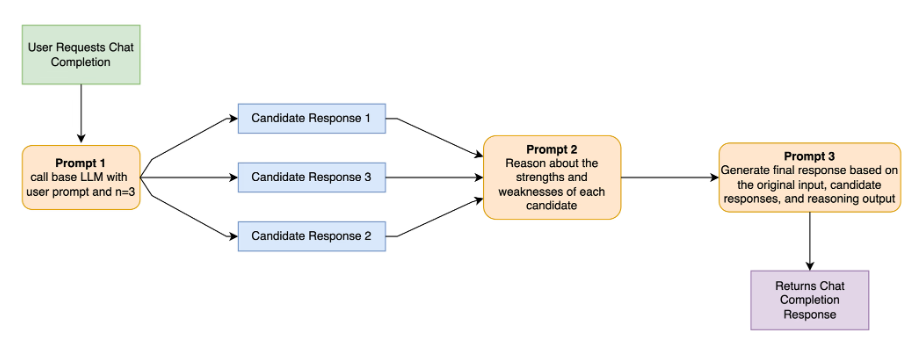

The MoA model is designed as a drop-in replacement for GPT-4 and is compatible with various base models, including GPT-4 Turbo and GPT-4o. It uses a three-prompt chain to generate each completion: the first prompt generates three diverse candidate completions, the second critiques those candidates, and the third combines the best elements of each to produce the final output. This structured approach is intended to yield higher-quality responses than a single call to the base model.
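
The three-prompt chain described above can be sketched in a few lines. This is an illustration under stated assumptions, not OpenPipe’s implementation: the `Completer` interface, the function name `moa_complete`, and the wording of the critique and synthesis prompts are all hypothetical, and the actual prompts OpenPipe uses are not given in this article.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical interface: takes chat messages and a sample count,
# and returns that many completions from some base model.
Completer = Callable[[List[Message], int], List[str]]


def moa_complete(messages: List[Message], complete: Completer,
                 n_candidates: int = 3) -> str:
    """Run a generate -> critique -> combine chain and return the final text."""
    # Prompt 1: sample several diverse candidates for the original request.
    candidates = complete(messages, n_candidates)
    numbered = "\n\n".join(
        f"Candidate {i}:\n{text}" for i, text in enumerate(candidates, start=1)
    )

    # Prompt 2: ask the model to critique the candidates (assumed wording).
    critique_prompt = {
        "role": "user",
        "content": "Critique each candidate response below.\n\n" + numbered,
    }
    critique = complete(messages + [critique_prompt], 1)[0]

    # Prompt 3: combine the best elements into one answer (assumed wording).
    combine_prompt = {
        "role": "user",
        "content": (
            "Using the candidates and the critique, write one final response "
            "that keeps the best elements of each.\n\n"
            + numbered + "\n\nCritique:\n" + critique
        ),
    }
    return complete(messages + [combine_prompt], 1)[0]


def stub_completer(messages: List[Message], n: int) -> List[str]:
    """Offline stand-in for a real model call, useful for dry runs."""
    return [f"draft {i}" for i in range(1, n + 1)]
```

Passing the model call in as a function keeps the chain independent of any particular provider, which fits the article’s point that the approach works with different base models. Note that each final completion costs three model calls, so the chain trades extra inference for output quality.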

OpenPipe has conducted extensive evaluations to validate the MoA model’s performance. In addition to automated benchmarks, the company employed human evaluators to check that the model’s outputs align with human judgment. Combining LLM-as-judge scoring with human evaluation gave a more complete validation: the MoA model remained ahead of GPT-4 Turbo by a margin of 9%, even after adjustments for human preferences.

OpenPipe is committed to continuous improvement and plans to release enhanced variants of the MoA model incorporating new techniques and models. Currently, users can access these models through the OpenPipe platform by creating an account and using the OpenAI-compatible chat completions endpoint. This ease of access ensures that a wider audience can benefit from the advancements in synthetic data generation offered by OpenPipe.
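
Because the endpoint is OpenAI-compatible, a request takes the usual chat completions shape: a POST to `/chat/completions` with a bearer token and a JSON body containing `model` and `messages`. The sketch below only builds such a request using Python’s standard library. The base URL and model name are placeholders, not real OpenPipe values; the actual ones would come from your OpenPipe account.

```python
import json
import urllib.request

# Placeholders only: substitute the base URL and model name
# shown in your own OpenPipe account.
BASE_URL = "https://example.invalid/v1"
MODEL = "moa-model-placeholder"


def build_chat_request(prompt: str, api_key: str,
                       base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` and reading `choices[0].message.content` from the JSON response follows the standard OpenAI response format. Existing OpenAI client code can typically be pointed at a compatible endpoint by changing only the base URL, API key, and model name.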

OpenPipe’s Mixture of Agents model represents a significant advancement in AI, particularly in generating high-quality synthetic training data at a lower cost. Its superior performance, cost efficiency, and innovative design make it a valuable tool for AI practitioners looking to optimize their models. OpenPipe continues to refine and expand this technology, pushing synthetic data generation and model fine-tuning to new heights.