ButSpeak.com

News which Matters.

Finnish startup Flow Computing has unveiled a chip that it says can potentially double CPU performance as-is and raise it by up to 100 times once software is optimized for it. If the claims hold up, the breakthrough could significantly benefit AI technologies and autonomous vehicle systems by providing the computational power these applications need.

Originating from Finland’s VTT Technical Research Centre, Flow Computing introduces the Parallel Processing Unit (PPU). Despite skepticism about these bold claims, Flow’s co-founder and CEO, Timo Valtonen, remains confident.

The PPU is a companion chip designed to optimize processing tasks in real time, turning traditional serial processing into more efficient parallel operations without additional power consumption or excessive heat. Flow likens the effect to widening a CPU from a one-lane road to a multi-lane highway, dramatically improving efficiency and processing speed.

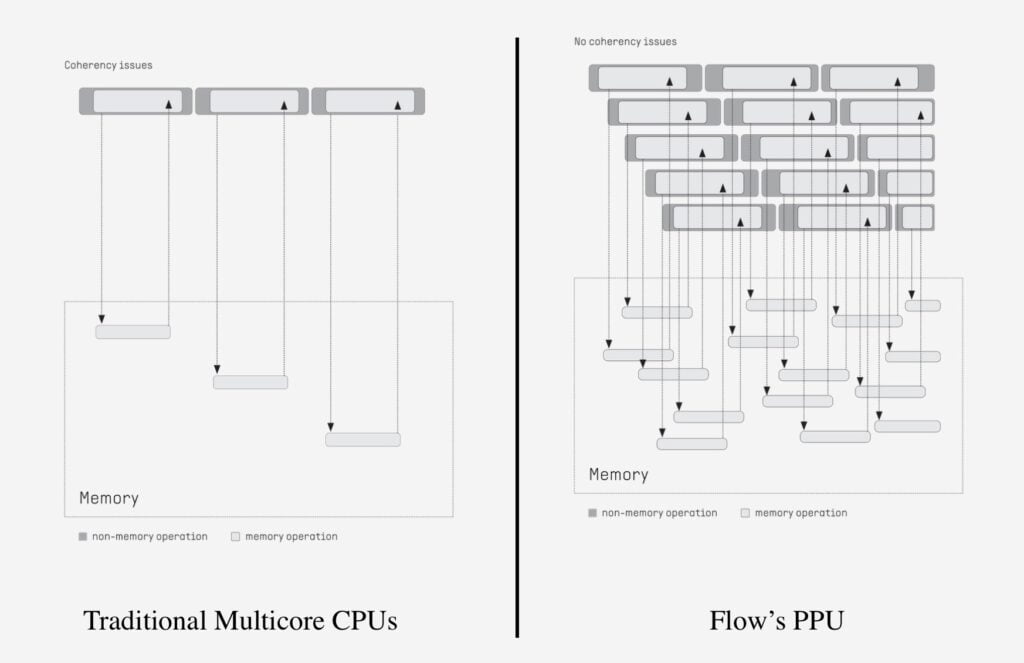

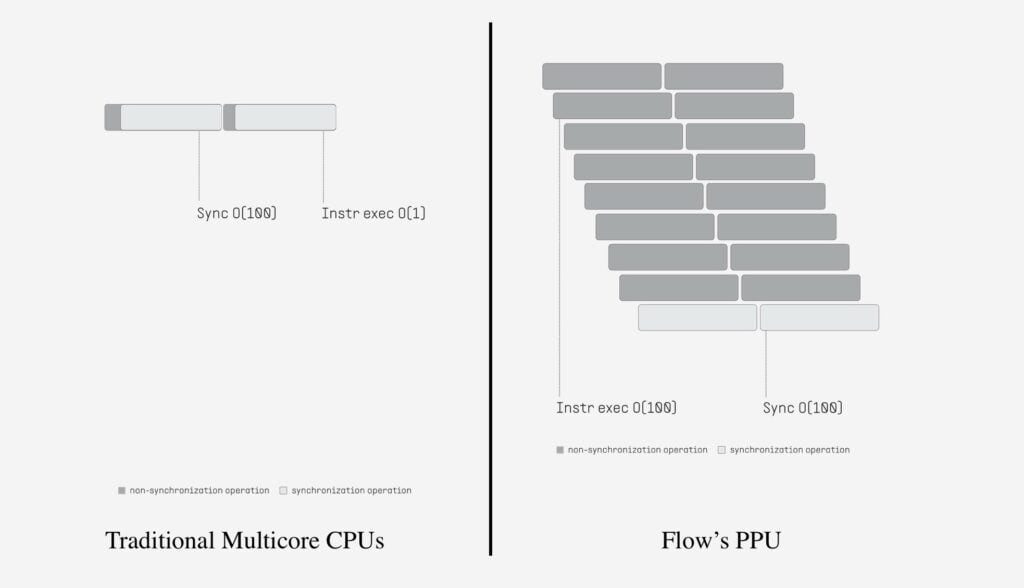

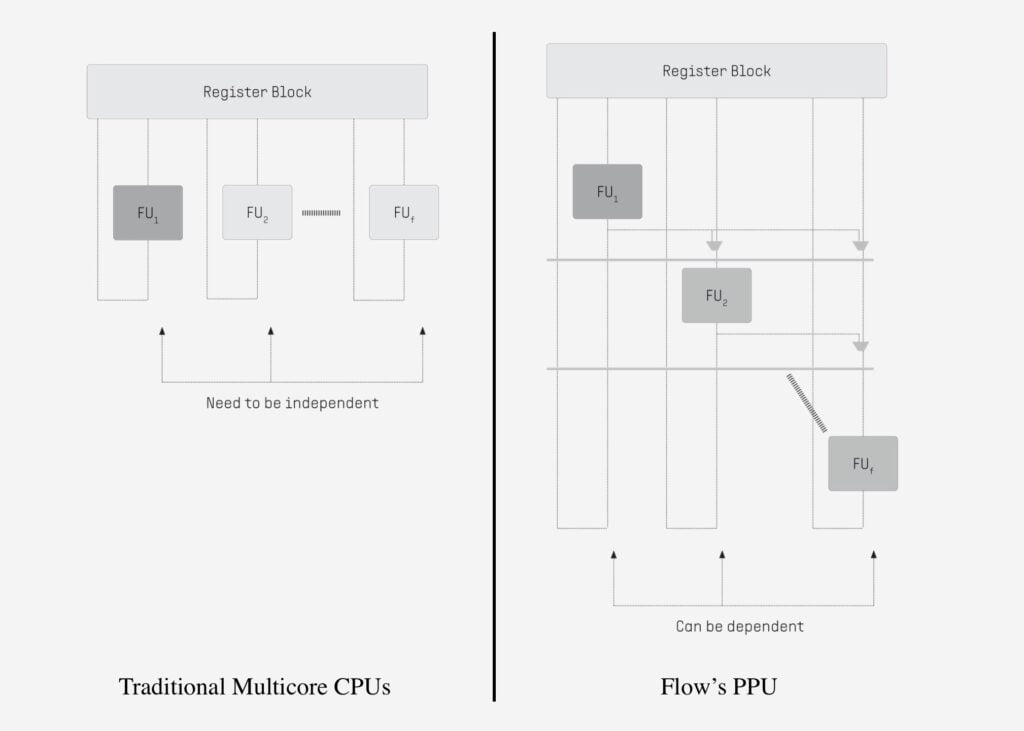

Flow’s PPU enhances CPU functionality by managing tasks at nanosecond intervals, allowing multiple processes to run simultaneously. This increases throughput without altering the CPU’s clock speed or architecture. However, the success of the technology hinges on its adoption by chip manufacturers: the PPU must be integrated at the design stage, which could disrupt current production methods. Despite these challenges, the minimal modifications needed and the potential for substantial performance improvements make it an attractive option for chipmakers facing growing computational demands.

Flow Computing has demonstrated the effectiveness of its technology in FPGA-based tests. With €4 million in initial funding and backing from several venture capital firms, the startup is now seeking industry partnerships to further develop and scale its solution.

At the heart of Flow’s groundbreaking technology is its patented Parallel Processing Unit (PPU) architecture and versatile compiler ecosystem. The PPU can seamlessly integrate into any design architecture or process geometry, providing immediate performance boosts for future CPUs and eliminating the need for costly GPU acceleration.

Flow’s technology significantly enhances not only individual devices but also embedded systems and data centers. Its applications span edge and cloud computing, AI clouds, multimedia processing over 5G/6G networks, autonomous vehicles, and military-grade computing, setting new standards for CPU capabilities.

The PPU is an IP block that integrates tightly with the CPU and is designed to be highly configurable across use cases. Customization options include the number of cores, functional units, and on-chip memory resources. Only minimal CPU modifications are required: the PPU interface is integrated into the CPU’s instruction set, and the performance gains come through that integration.

Flow’s PPU addresses critical challenges around CPU latency, synchronization, and virtual level parallelism.

Flow’s technology is fully backward compatible, improving the performance of existing legacy software and applications. Flow’s compiler identifies parallel code segments so that they run on the PPU cores, and the company is developing an AI tool to help developers optimize code for maximum performance.
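To make "parallel code segments" concrete, here is a minimal generic sketch in Python. It is not Flow's compiler, API, or toolchain; the function names (`scale_pixel`, `run_serial`, `run_parallel`, `running_total`) are hypothetical and chosen only for illustration. It contrasts a loop whose iterations are independent, and could therefore be spread across parallel cores, with a loop where each step depends on the previous one.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_pixel(value: int) -> int:
    """Independent per-element work: no call depends on another call's result."""
    return min(255, value * 2)


def run_serial(pixels: list[int]) -> list[int]:
    # "One-lane road": elements are processed one after another.
    return [scale_pixel(p) for p in pixels]


def run_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    # "Multi-lane highway": the same independent work is handed to several workers.
    # Executor.map returns results in input order, so the output matches run_serial.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(scale_pixel, pixels))


def running_total(pixels: list[int]) -> list[int]:
    # Counter-example: each step needs the previous sum (a loop-carried
    # dependency), so this loop cannot simply be split across workers.
    totals, acc = [], 0
    for p in pixels:
        acc += p
        totals.append(acc)
    return totals
```

The thread pool only illustrates the structure of the idea. In standard CPython the global interpreter lock means threads do not speed up CPU-bound work like this, and nothing here reflects how the PPU schedules work in hardware.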

Investments in compute accelerators have transformed customer capabilities, yet general-purpose compute remains critical. Improving CPU performance is essential to get the most out of that infrastructure, reduce costs, and meet sustainability goals. Without improvements in general-purpose compute, infrastructure capabilities will remain limited despite advances in accelerators.